Testing AI detection tools has become a weird hobby of mine lately. Some people collect stamps, some people learn guitar—I feed essays and rambling texts into machines to see if they’ll call me a robot.

Today’s trial run was with Winston AI, one of the newer players in the AI detection world. And let me tell you, it’s a curious little beast.

First Impressions

When I landed on Winston AI’s homepage, the design caught my eye right away. Clean, professional, no cartoon robots with magnifying glasses (thankfully).

It gave me the feeling of a tool meant for serious work: teachers, publishers, and businesses worried about authenticity.

Logging in was smooth. No hidden hoops or endless confirmations. And within a few minutes, I was pasting text into the box and holding my breath.

But the real question buzzing in my head was: “Does Winston AI actually know the difference between me and ChatGPT, or is it going to accuse Dickens of being a bot like some other detectors do?”

Accuracy Testing: Hit or Miss?

I threw three very different samples at it:

- My own review draft – 100% human, written late at night with coffee jitters.

- A ChatGPT-generated essay – neat, structured, almost too polished.

- A classic text passage from George Orwell’s 1984.

Here’s how Winston AI judged them:

| Text Sample | Reality | Winston AI’s Verdict | My Reaction |
|---|---|---|---|
| My draft | Human | 96% Human | “Finally, a tool that gets me.” |
| ChatGPT essay | AI | 99% AI | “Fair enough, you caught the bot.” |
| Orwell passage | Human | 92% Human | “At least Orwell wasn’t called out as a cyborg this time.” |

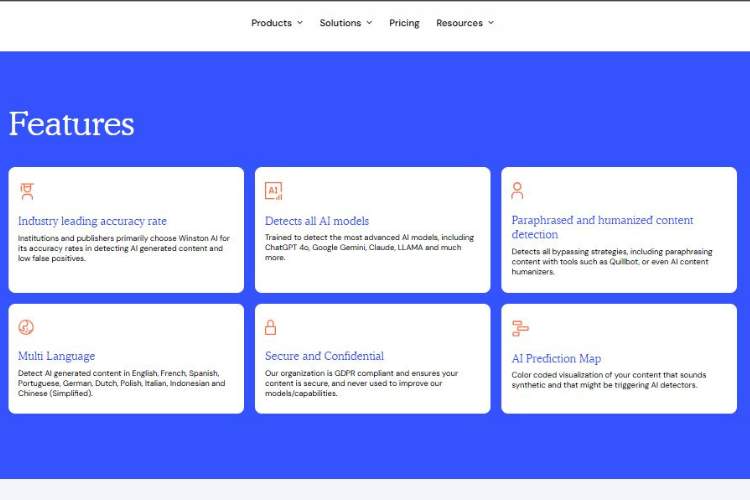

I have to admit, I was impressed. Unlike some competitors, Winston AI didn’t fall into the trap of flagging classic literature as machine-made.

It gave nuanced results, with percentages rather than blunt yes/no answers.

Still, it wasn’t flawless. Shorter passages sometimes leaned toward “AI-generated,” even when they were mine.

That’s a common issue with detectors—they need more context to be confident.

Features Beyond Detection

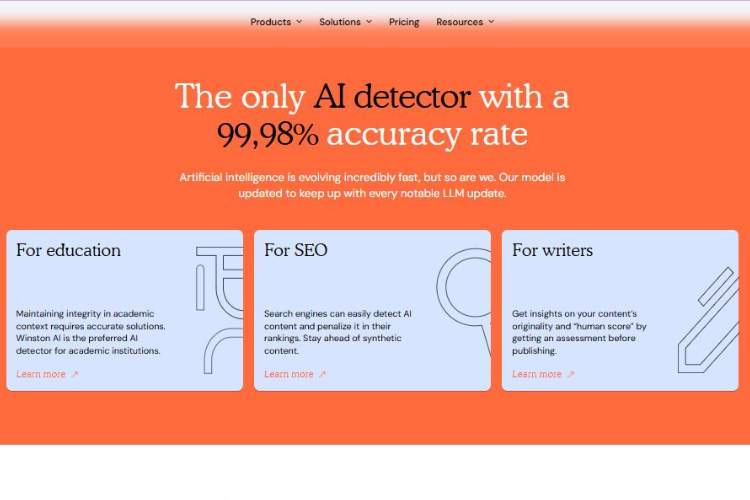

Winston AI isn’t just a one-trick pony. They’ve layered in other features that make it feel like a professional-grade tool rather than a casual toy.

- Plagiarism Checker: It scans for overlaps with existing online content. Useful for educators and publishers.

- Reports and Dashboard: You can generate detailed reports showing detection results, which feels very “official.”

- File Uploads: Instead of pasting text, you can upload documents (PDF, DOCX, etc.). Handy for longer manuscripts or essays.

- Multiple Languages: It doesn’t just work in English, which is a huge plus in a global context.

What struck me most was how polished the platform felt. It wasn’t clunky or half-baked. This is clearly meant for professionals who need reliable, repeatable results.

Emotional Reactions: Relief, Frustration, Curiosity

Using Winston AI felt like being judged by a strict but fair teacher. When it called my writing human, I felt validated, almost smug.

When it flagged a short snippet as possibly AI, I felt defensive, like, “Hey! I sweated over those sentences!”

There’s an emotional weight to these tools that often goes unnoticed. Writers, students, creators—we care about being recognized for our work.

When a detector gets it wrong, it stings. Winston AI, to its credit, got it right most of the time, which gave me a sense of trust.

Who It’s Best For

Based on my testing, I see Winston AI fitting in best with:

- Teachers and schools: Catching AI-assisted essays before grading.

- Publishers and editors: Verifying that submissions are authentic.

- Businesses: Ensuring website or marketing copy is original.

- Freelancers: Double-checking their own work before handing it off to clients.

But I’d warn casual users: if you’re just curious, it might feel like overkill. This tool isn’t dressed up for fun; it’s dressed for work.

Strengths vs Weaknesses

Here’s the breakdown, plain and simple:

| Strengths | Weaknesses |
|---|---|
| High accuracy, nuanced results | Struggles with very short texts |
| Professional interface and reports | Premium pricing may deter casual users |
| Supports plagiarism detection | AI scores sometimes swing too confidently |
| Works with multiple languages | Still can’t fully “explain” its decisions |

Bigger Picture: Can We Trust AI Detectors?

I’ve been wrestling with this question across all the tools I’ve tested. The truth? None of them are perfect.

Winston AI might be one of the more accurate ones I’ve used, but it’s still making educated guesses.

That’s the uncomfortable truth: these detectors aren’t magic lie detectors; they’re probability engines. And relying on them as absolute proof, especially in education, can be unfair.

Imagine being a student falsely accused just because an algorithm thought your style was “too polished.” That keeps me up at night.

Winston AI feels like one of the more responsible players in this field, but the broader ethical questions around AI detection still loom large.

My Final Verdict

So, would I recommend Winston AI? Yes—if you’re an educator, publisher, or professional who needs a serious tool.

It feels more reliable than most detectors I’ve tested, and it offers a wider toolkit with plagiarism checks and reports. But for casual users, it might feel heavy-handed.

Here’s my final scorecard:

| Category | Score (Out of 10) | Notes |
|---|---|---|
| Accuracy | 8.5 | One of the better ones, but not perfect. |
| Usability | 9 | Smooth, polished, professional. |
| Features | 9 | Goes beyond detection with plagiarism & reports. |
| Value | 7.5 | Great for professionals, pricey for dabblers. |
| Emotional Trust Factor | 8 | Feels fairer and less chaotic than competitors. |
| Overall | 8.5 | A strong contender in AI detection. |

Closing Thought

Winston AI is like that teacher you secretly respect: tough, methodical, rarely wrong, and not easily swayed by nonsense.

It won’t win any awards for being “fun,” but when the stakes are high and authenticity matters, it feels like a trustworthy ally. Just remember—it’s still a machine making guesses, not an all-seeing judge. And that’s an important distinction to keep in mind.