Testing AI detection tools has become something of a strange hobby for me. Some people binge-watch entire series in one sitting; I end up pasting paragraphs into detectors, watching numbers pop up, and then scribbling notes like a wannabe detective.

Today’s test subject was UnGPT, a tool that promises to tell you whether text is human, AI-generated, or somewhere in between. Spoiler: it was both more impressive and more flawed than I expected.

First Encounter

The first thing I noticed about UnGPT was how clean and uncluttered the interface looked. No overwhelming dashboards, no aggressive pop-ups—just a text box, a button, and a promise: “Find out if this is AI.”

That simplicity is refreshing, but also a little deceiving. You half-expect something this minimal to either be lightning-fast and clever, or to feel like one of those freebie clones cobbled together with duct tape.

I couldn’t help but think: If I feed it something messy, like a paragraph full of personal tangents and broken grammar, will it still catch the AI skeleton underneath? That was my starting experiment.

Core Features

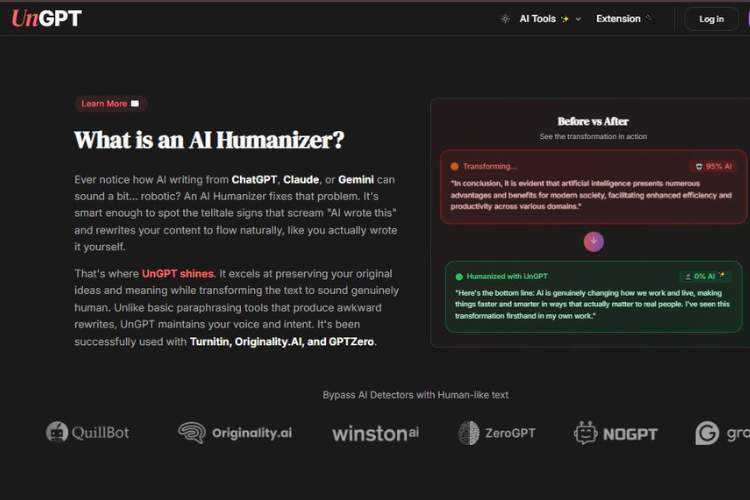

- AI Detection: Assigns a probability of whether text is machine-written.

- Detailed Breakdown: Sometimes gives insights into what made the text look “suspicious.”

- Multi-Language Support: Claims to work on more than just English.

- API Access: Useful for developers who want to integrate detection into workflows.

- Free and Paid Options: The free tier is decent, but premium plans unlock higher limits and deeper analysis.

Running the Tests

I ran three types of text through UnGPT:

- Pure AI output (straight from a model, untouched).

- Hybrid content (AI with my edits, quirks, and slang).

- Completely human-written rants (long-winded stories with no polish).

On the first, UnGPT flagged it without hesitation. It gave me a high probability score that basically screamed, “Yeah, no human wrote this.” Fair enough.

On the hybrid text, results got shakier. Sometimes it leaned “human”; other times it hedged in the middle with a label like “uncertain.”

I actually liked that honesty—it didn’t pretend to be all-knowing. But as a user, it leaves you scratching your head. If the tool itself can’t tell, what do you do with that information?
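One way I make sense of a middle-of-the-road score is to sort it into bands myself. Here is a minimal sketch of that idea. The function name and the 0.35 / 0.65 cutoffs are my own made-up assumptions for illustration; they are not UnGPT's actual thresholds, and I don't know how the tool draws its lines internally.

```python
def label_score(ai_probability: float, low: float = 0.35, high: float = 0.65) -> str:
    """Map an AI-probability score (0.0 to 1.0) to a coarse label.

    The default cutoffs are hypothetical, not UnGPT's real thresholds.
    """
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    if ai_probability >= high:
        return "likely AI"
    if ai_probability <= low:
        return "likely human"
    # Anything between the two cutoffs is treated as a coin toss.
    return "uncertain"


# Hypothetical scores, one per band.
for score in (0.95, 0.50, 0.10):
    print(score, "->", label_score(score))
```

The practical takeaway for me: anything that lands in the “uncertain” band goes to a human reader instead of being treated as a verdict either way.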

The most surprising moment came with my purely human-written piece. One test scored it “likely AI,” which had me chuckling. Apparently, my natural tendency to repeat myself and get overly formal in moments of stress mimics machine writing. Go figure.

Where UnGPT Shines

- Simplicity: Paste text, get a score. No tutorials needed.

- Decent Accuracy on Obvious AI: In my tests, untouched AI drafts were flagged with high confidence.

- Speed: Results show up almost instantly.

- API Potential: Developers could build interesting tools with it.

Where It Trips Up

- Edge Cases: Hybrid or heavily edited texts confuse it.

- Overconfidence at Times: Some human texts still get mislabeled.

- Limited Depth: It doesn’t provide as much linguistic breakdown as competitors like GPTZero or Winston AI.

Emotional Take

Here’s where I land on this: UnGPT feels like a tool made for peace of mind, not absolute certainty. It’s like having a smoke detector—it’ll alert you if there’s a fire, but it might also beep when you burn toast. And honestly, that’s not the worst thing.

If you’re a teacher trying to spot AI essays, it could be a helpful first filter. If you’re a writer worried about whether your content might get flagged, it’s a way to test the waters.

But if you’re hoping for 100% truth in every scenario, UnGPT will disappoint you. Then again, I don’t think any of these tools have that level of precision yet.
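To put rough numbers on the smoke-detector comparison, here is a back-of-the-envelope sketch of how often a flag actually points at AI text. Every figure in it is an assumption I picked for illustration (the share of AI essays in a pile, how often the detector catches them, how often it beeps at human writing); none of them are measured rates for UnGPT.

```python
def share_of_flags_that_are_ai(ai_share: float,
                               detection_rate: float,
                               false_alarm_rate: float) -> float:
    """Fraction of flagged texts that really are AI-written.

    ai_share:         fraction of all texts that are AI-written
    detection_rate:   fraction of AI texts the detector flags
    false_alarm_rate: fraction of human texts the detector flags anyway
    """
    flagged_ai = ai_share * detection_rate
    flagged_human = (1.0 - ai_share) * false_alarm_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        return 0.0  # nothing was flagged at all
    return flagged_ai / total_flagged


# Hypothetical classroom: 20% AI essays, 90% of those caught, 10% false alarms.
result = share_of_flags_that_are_ai(0.20, 0.90, 0.10)
print(f"{result:.0%} of flagged essays are actually AI")
```

With those made-up numbers, roughly 69% of flagged essays are really AI-written, which means close to one flag in three lands on a human. That is burnt toast, not fire, and it is why I would treat a flag as a reason to look closer rather than as proof.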

My Opinion and Who It’s Best For

- Best for Educators: It’s straightforward, so teachers don’t need a manual to use it.

- Best for Students/Creators: Good for quick checks before submitting work.

- Not Ideal for Researchers: If you need detailed linguistic analysis, look elsewhere.

Personally, I found UnGPT useful as a first step. I wouldn’t bet my life on its results, but I’d absolutely use it to get a quick pulse check. Think of it like asking a friend for their gut reaction—you don’t take it as law, but you value the perspective.

Final Verdict

UnGPT is a solid tool in the crowded space of AI detectors. It does its job well enough, especially when dealing with raw AI content, but it struggles with the gray areas that make human writing, well, human. My rating?

- Ease of use: 9/10

- Detection reliability: 7/10

- Explanatory depth: 6/10

- Overall value: 7.5/10

Would I recommend it? Yes, if you want something simple and fast. But no, if you’re looking for a courtroom-level verdict on whether text is human or AI.