Some tools you try once, nod politely, and move on. Others stick in your mind because they carry a certain weight. Originality.ai belongs to the second camp.

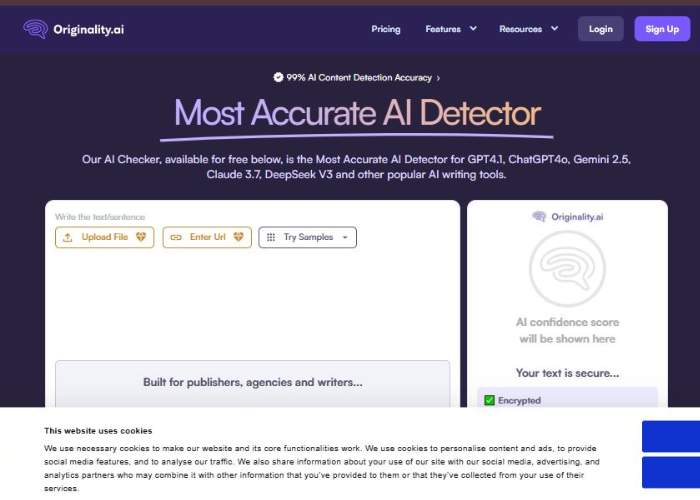

This isn’t a toy for curious students; it’s a business-grade machine that promises to sniff out both AI-generated writing and plagiarism in one sweep. Sounds impressive on paper, but does it actually deliver when the rubber hits the road?

The Feel of It

When you first log in, the vibe is… professional. No flashy colors screaming for attention, no cartoon robots wagging fingers. Just a straightforward dashboard that whispers, “I’m built for serious work.”

It’s almost intimidating, like walking into a boardroom where everyone already knows their roles and you’re the new kid trying to figure out where the coffee machine is.

I liked that. It set the tone: this isn’t here to play; it’s here to work.

Features That Make It Stand Out

Originality.ai isn’t shy about being a “do-it-all” checker. Here are the main weapons in its arsenal:

- AI Detection: Claims to spot GPT-3, GPT-4, and even newer models. Instead of a blunt yes/no verdict, it spits out a percentage likelihood of AI authorship. Subtle but important.

- Plagiarism Checker: Built-in, and pretty robust. Scans across billions of web pages. For publishers or agencies, this combo is gold—you don’t need two separate tools.

- Team Accounts: You can add multiple members, assign roles, and keep track of scans in one place. That’s a godsend for agencies juggling lots of content.

- History & Reports: Every scan is logged and reportable, so you’re not left scratching your head later.

- API Access: For the developers who want to stitch it into their workflows. It’s nerd candy, honestly.

Testing It in Real Life

I didn’t want to just parrot marketing copy, so I threw some curveballs:

- A raw personal essay—rambling, late-night, caffeine-driven thoughts.

- Pure AI text—straight from GPT, untouched.

- Hybrid text—AI-generated, but I rewrote half of it with my own quirks and asides.

Results?

| Sample | Reality | Originality.ai Verdict | Reaction |
| --- | --- | --- | --- |
| Personal essay | Human | 99% human | Relief. It recognized the messy humanity. |
| Pure AI article | AI | 100% AI | Nailed it: clear win. |
| Hybrid piece | Half/half | 72% AI likelihood | Interesting. It sniffed out the machine influence despite edits. |

That last one got me thinking. Even when I edited, sprinkled idioms, chopped sentences—Originality.ai still flagged it as “more AI than human.” Fair? Maybe. Frustrating? A bit. But also a reminder: once AI touches text, it leaves fingerprints.
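
One way to keep a score like that in perspective is to treat it as a prompt for review rather than a verdict. Here is a minimal sketch of that idea in Python. The `triage` function and its 30% and 80% cutoffs are hypothetical choices for illustration, not anything Originality.ai publishes, and reading “99% human” as a 1% AI likelihood is also an assumption.

```python
def triage(ai_likelihood: float, low: float = 0.30, high: float = 0.80) -> str:
    """Map an AI-likelihood score in [0, 1] to a suggested next step.

    The cutoffs are hypothetical defaults, not values from Originality.ai.
    """
    if not 0.0 <= ai_likelihood <= 1.0:
        raise ValueError("ai_likelihood must be between 0 and 1")
    if ai_likelihood >= high:
        return "likely AI: ask the writer about their process"
    if ai_likelihood >= low:
        return "gray zone: review by hand before deciding"
    return "likely human: no action"


# The three samples from the table above, expressed as AI-likelihood fractions.
# Assumption: "99% human" is treated as a 1% AI likelihood.
samples = {"personal essay": 0.01, "pure AI article": 1.00, "hybrid piece": 0.72}

for name, score in samples.items():
    print(f"{name}: {triage(score)}")
```

With these made-up cutoffs, the 72% hybrid lands in the gray zone, which matches how it felt: worth a second look, not a conviction.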

Strengths Worth Celebrating

- Accuracy with extremes: Pure human vs pure AI—it’s confident, reliable, almost ruthless.

- Double duty: Plagiarism + AI detection in one platform saves so much time.

- Professional orientation: You feel this tool was designed with businesses, agencies, and publishers in mind.

- Transparency: Percentages instead of vague labels—it treats you like an adult.

Weaknesses That Bug Me

- Pricing: It uses a credit system, which makes sense for companies, but for casual or occasional users, it feels pricey.

- Short text struggles: Paste in a single paragraph and the results wobble. That’s not entirely the tool’s fault, since any detector needs enough text to find a pattern, but it still limits what you can check.

- Hybrid gray zone: If you use AI lightly (say, to brainstorm), Originality.ai sometimes leans heavily toward the AI verdict, which could make honest writers feel unfairly judged.

- High-stakes pressure: Imagine being a freelancer delivering work and seeing a client pull up a “72% AI likelihood” score. Even if you wrote half of it, that’s a tough conversation.
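
The short-text wobble is easy to guard against before a scan ever runs. The sketch below simply counts words and tells you whether a sample is long enough to bother scoring. The 100-word minimum and the `long_enough` helper are assumptions for illustration; the review did not measure where results become stable.

```python
MIN_WORDS = 100  # hypothetical cutoff; tune it against your own samples


def long_enough(text: str, min_words: int = MIN_WORDS) -> bool:
    """Return True when text has at least min_words whitespace-separated words."""
    return len(text.split()) >= min_words


paragraph = "word " * 40   # a 40-word stand-in for a single pasted paragraph
article = "word " * 600    # a 600-word stand-in for a full article

print(long_enough(paragraph))  # False: too short to trust a score
print(long_enough(article))    # True
```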

The Emotional Side of Using It

What surprised me was how much I felt during testing. When it said my essay was human, I grinned. When it flagged the hybrid text, I felt a little defensive, like it was accusing me of cheating when I hadn’t.

That’s the thing about tools like this—they don’t just measure text, they measure trust. And when trust is on the line, even percentages can carry a sting.

Who Should Use It

Originality.ai isn’t for everyone. Here’s who I think it suits best:

- Publishers who need to vet dozens of submissions daily.

- Agencies outsourcing content and needing guardrails.

- Businesses that can’t afford to risk AI-heavy content in their marketing.

- Freelancers wanting to prove their work is authentic (though, ironically, this can backfire if hybrids get flagged).

It’s probably not ideal for teachers catching one-off essays or hobbyists dabbling with AI writing. Too heavy-duty, too expensive for that.

My Scorecard

| Category | Score (out of 10) | Thoughts |
| --- | --- | --- |
| Ease of Use | 9 | Smooth, no fuss. |
| Accuracy (pure texts) | 9 | Rock solid. |
| Accuracy (hybrids) | 7.5 | Good, but sometimes overestimates. |
| Extra Features | 9.5 | Plagiarism checker seals the deal. |
| Value | 8 | Excellent for pros, steep for casuals. |
| Overall | 8.5 | A serious tool for serious stakes. |
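
For a quick arithmetic check on the scorecard, here is a minimal sketch that averages the five category scores. Equal weighting is an assumption; the 8.5 overall may simply be a judgment call rather than a computed mean.

```python
from statistics import mean

# Category scores copied from the scorecard (the Overall row is left out).
scores = {
    "Ease of Use": 9,
    "Accuracy (pure texts)": 9,
    "Accuracy (hybrids)": 7.5,
    "Extra Features": 9.5,
    "Value": 8,
}

average = mean(scores.values())  # unweighted mean: an assumption, see above
print(round(average, 1))  # 8.6
```

The unweighted mean comes out at 8.6, within a rounding step of the 8.5 overall.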

Final Thoughts

Originality.ai is the heavyweight in the AI detection ring. It’s sharp, confident, and built for pros. I walked away impressed, if also a little wary of how harsh it can be on “gray zone” texts.

But maybe that’s the price of aiming for rigor—erring on the side of caution rather than letting AI slip through the cracks. Would I recommend it? For publishers and agencies, absolutely. For casual users? Not unless you’ve got money to spare.

For me, it’s one of the best detectors out there, but like all of them, it’s not gospel—it’s a tool. And tools are only as fair as the people using them.