I’ve been using GPTZero off and on for a while now, throwing human-written text, hybrid text, polished AI text, and even heavily edited pieces at it to see how well it separates what’s real from what’s generated. Here’s what I found.

What Is GPTZero, Anyway?

GPTZero is an AI detection tool built to flag text that seems like it was produced by large language models (LLMs) rather than by “real” humans.

It was started by Edward Tian, then a Princeton student, along with a few others. It grew out of concern over AI-assisted writing, cheating, and plagiarism.

It doesn’t just give a binary “AI / human” answer — it uses metrics like perplexity and burstiness to judge how machine-like vs human-like something is.

Perplexity measures how predictable the text is to a language model: low perplexity means the model found each next word easy to guess. Burstiness captures how much sentences vary (long, short, simple, complex). Humans tend to vary. AI tends (though not always) to smooth out variation.
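To make those two ideas concrete, here is a minimal sketch in Python. It is not GPTZero’s actual method, which isn’t public in this detail. I’m assuming a toy unigram model with add-one smoothing as a stand-in for a real language model, and I’m treating burstiness as the coefficient of variation of sentence lengths. The function names (`tokenize`, `unigram_perplexity`, `burstiness`) are my own, made up for illustration.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under an add-one-smoothed unigram model
    fitted on `reference_text`. Lower means more predictable.

    Assumption: a real detector would use a neural language model,
    not word counts. This only illustrates the formula.
    """
    ref_counts = Counter(tokenize(reference_text))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no tokens")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing so unseen words get a small nonzero probability.
        p = (ref_counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity = exp of the average negative log-probability per token.
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Coefficient of variation of sentence lengths, counted in words.

    Assumption: this is one simple proxy for "variation in sentences",
    not a definition taken from GPTZero.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return pstdev(lengths) / mean(lengths)
```

Feed `burstiness` a paragraph of evenly sized sentences and you get a value near zero; mix one-word sentences with long ones and it climbs. Likewise, text made of words the reference has seen often scores a lower perplexity than text full of unseen words.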

Also, they’ve added features: sentence-by-sentence scoring, highlighting “AI vocabulary” (phrases or styles more typical of machine output), etc. The free tier gives you a taste; paid plans unlock more scans, batch uploads, more detailed feedback.

My Testing & What Stood Out

I ran GPTZero against a set of texts I prepared:

- My own essays (unedited, with messy grammar, slang, personal style)

- AI-generated text straight out of, say, a recent GPT model

- Hybrid texts: AI-written drafts that a human then edited heavily

Here’s a summary:

| Sample Type | How GPTZero Scored It | Thoughts / Reaction |
| --- | --- | --- |
| My raw human text | Mostly “human” with low % AI | Felt good. It didn’t over-flag me, which is important because style isn’t always polished. |
| Pure AI-generated text | High AI score (close to 100% in many cases) | Expected; that’s what it’s built for. Wasn’t perfect when the AI text was “humanized” or paraphrased, though. |
| Hybrid / edited AI text | Mixed results: sometimes flagged partially; sometimes slips through more than I’d like | This is where things get fuzzy. If an AI text is tweaked, paraphrased, edited a lot, GPTZero sometimes under-detects or gives lower confidence. |

So GPTZero is strong in clear cases, weaker when things are “in between.”

Strengths

Here are the parts where GPTZero really shines (and why I often reach for it):

- User-friendly interface: Clean, simple. Paste what you want, get feedback. Sentence highlighting (flagging parts more likely AI) is super useful to see which bits are suspicious rather than just a vague overall score.

- Free plan accessibility: You don’t need to pay to try it. For students or casual users this matters a lot. If you only occasionally want to check something, the free tier makes it possible.

- Transparency & updates: They publish notes on when they update and what they’ve changed. That’s rare in this space. I saw notes about improved detection of paraphrased and humanized content, for example.

- Academic / educator orientation: It’s clear GPTZero was built in large part for schools and teachers. It includes sentence-level detail, giving users (teachers, editors) something to work with, not just a pass/fail label.

Weaknesses & Where It Falls Short

No tool is perfect, and GPTZero has its blind spots. These are things I noticed, or saw in studies, that worry me.

- False Positives: Human text sometimes gets flagged, especially if the style is regular, formal, or uses repetitive structures. If someone writes very clean academic text (short, neat sentences, minimal slang), GPTZero might lean toward “AI-like” even if it’s pure human. That’s dangerous in academic or evaluative settings.

- Paraphrased / “humanized” AI text often evades detection or gets significantly lower confidence. If someone runs a generated text through paraphrasers, edits heavily, mixes in human style, many segments slip through or are borderline. That means it’s not bulletproof.

- Short text limitations: Very short paragraphs or sentences often don’t give enough signal. GPTZero seems to struggle to confidently assign high AI (or human) scores when there’s just a sentence or two. It’s like trying to guess a person’s accent from a single word. Not enough data. I noticed this in my tests.

- Bias & non-native English issues: Writers who aren’t native speakers, who use local idioms, or who vary their sentence structure less may be unfairly flagged (or get lower “human” confidence). I noticed this especially when I submitted my own mixed English/vernacular writing. Studies also report this.

- Overreliance on linguistic features: Because it uses perplexity, burstiness, etc., GPTZero depends heavily on statistical features of text. It doesn’t “understand” meaning, context, creativity, irony, tone. So creative writing (poetry, experimental style) or intentional stylings might be misjudged.

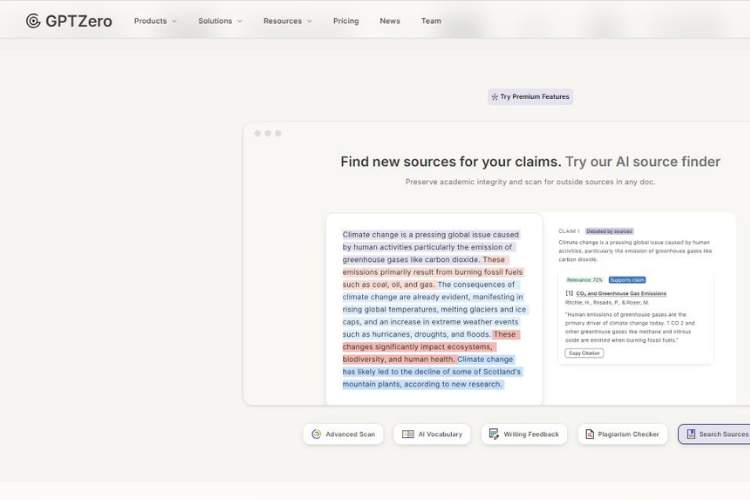

Other Features Worth Knowing

Beyond detection, here are the extras I liked or found useful (and a few that fell short):

- Sentence-by-sentence scoring: Helps you see which part of your text is triggering alerts. Good for editing.

- “AI vocabulary” highlighting: It flags words/phrases more typical in AI writing; sometimes helpful, though sometimes over-sensitive.

- Plagiarism checking: Some plan tiers include a plagiarism check. That helps when you want to check not only for AI origin but also for copied content.

- Feedback / writing suggestions: GPTZero sometimes suggests how to “humanize” or soften machine-like text, or improve sections. Useful for writers.

- Pricing & Limits: Free version has scan limits. If you want to check long documents or many documents, or use advanced scans, you’ll need to move to paid plans. For heavy users (teachers grading many essays, editors, companies), cost can add up.

Accuracy Numbers & What Studies Say

Some numbers from my readings and external tests:

- In some tests, GPTZero claims over 99% accuracy on “pure AI-generated content”.

- In academic settings or on hybrid content, accuracy is lower, often in the range of 80-98% depending on how heavily the text was edited or paraphrased.

- In one study of 78 AI-generated papers and 50 human-written papers, most of the AI ones were flagged correctly (91-100% range), but there were false positives among the human texts.
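A single “accuracy” figure can hide the false positives, so it helps to split the numbers out. Here is a small sketch of how you might do that from raw counts. The function name `detection_metrics` and the counts in the example are hypothetical placeholders I picked for illustration; they are not taken from GPTZero or from any of the studies above.

```python
def detection_metrics(true_pos, false_neg, false_pos, true_neg):
    """Summarize a detector's results from confusion-matrix counts.

    true_pos:  AI texts flagged as AI
    false_neg: AI texts that slipped through as human
    false_pos: human texts wrongly flagged as AI
    true_neg:  human texts correctly passed
    """
    ai_total = true_pos + false_neg
    human_total = false_pos + true_neg
    if ai_total == 0 or human_total == 0:
        raise ValueError("need at least one AI text and one human text")
    flagged = true_pos + false_pos
    return {
        # Share of all texts labeled correctly.
        "accuracy": (true_pos + true_neg) / (ai_total + human_total),
        # Share of AI texts that were caught.
        "ai_detection_rate": true_pos / ai_total,
        # Share of human texts that were wrongly flagged.
        "false_positive_rate": false_pos / human_total,
        # Of everything flagged, the share that really was AI.
        "precision": true_pos / flagged if flagged else None,
    }


# Hypothetical counts for illustration only; not taken from any study.
example = detection_metrics(true_pos=95, false_neg=5, false_pos=5, true_neg=95)
```

With these made-up counts the accuracy comes out at 0.95, which sounds great, yet the false positive rate of 0.05 still means 5 human writers out of 100 get flagged. That second number is the one that matters most in a classroom.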

My Personal Take & Advice

Using GPTZero feels a bit like walking a tightrope. On one side: it’s genuinely useful, reducing brain-ache when you need to check text. On the other: you run a risk of unfair labeling unless you know how it works and its limits.

Having used it myself, here are my suggestions:

- For educators: use results as guidance, not as verdicts. If a student’s work gets flagged, review manually, ask questions. Let the student explain style rather than assume cheating.

- For writers/creators: if you’re using AI-assisted writing, try editing for variety: mix up sentence lengths, avoid repetitive structures, use personal voice, idioms, irregularities. That helps “humanize” text.

- For tool developers: improving detection of hybrid content and paraphrased AI-text is where the battlefield is. That’s where many tools, including GPTZero, get fuzzy.

Overall Verdict

If I had to rate it, I’d give GPTZero something like this:

| Category | Score out of 10 | Reasoning |
| --- | --- | --- |
| Ease of Use | 9 | Very approachable, intuitive interface. |
| Detection of Pure AI Text | 9 | Strong performance with raw, unedited AI text. |
| Handling Hybrid / Modified Text | 7 | Decent, but less consistent. |
| False Positive Risk | 6 | Not huge, but real enough to worry in academic settings. |
| Extra Features (feedback, sentence scoring, plagiarism) | 8 | Helpful tools beyond bare detection. |
| Value | 8 | Free plan plus paid tiers; works well especially for educators. |
| Overall | 8 | One of the better detectors, with caveats. Strong, but not infallible. |

Final Thoughts

GPTZero is not flawless, but in my experience it’s among the more reliable AI detection tools available today. It doesn’t claim to be perfect, which helps. When used smartly—knowing its limits, interpreting results with care—it can be a powerful ally.

I believe AI detection is going to keep evolving, and no one tool will ever “solve” it fully. But GPTZero has a solid foundation, and I think its path forward includes better handling of edited and paraphrased texts, reducing false positives, and maybe even contextual understanding (tone, voice, purpose).

Those improvements will make it more trustworthy and less scary for people who get flagged but did nothing wrong.
