Using AI tools for writing (drafting essays, polishing grammar, rephrasing) has become almost second nature for students like me. On one hand, they speed up work, catch mistakes, and make phrasing smoother.

On the other hand, there’s this tension: will the final text look “too AI-ish” and get flagged by detection tools?

What if a teacher, or a plagiarism checker, or something like Turnitin detects it as generated by AI (or heavily edited by it)? I’ve spent time trying different tools (grammar checkers, paraphrasers, humanizers) to see how they fare against detection tools.

In this post I describe my own journey: what I noticed, what I tested, what I liked/didn’t, especially regarding AI detection, and then I compare tools including some that claim to help with rephrasing or avoiding detection. Finally, I give my take on which tools seem the most useful.

What makes text “detectable” as AI text — what I learned

When I first tried writing an essay with heavy help from an AI (ChatGPT or similar) and then ran it through detection tools, there were certain patterns that stood out, repeatedly. Here are the big ones I noticed:

- Predictable sentence structure / repetition. AI tends to favor complete sentences, balanced punctuation, logical transitions (“Firstly”, “In conclusion”), etc. If I vary sentence length a lot, include fragments, sometimes run-ons, it tends to look more human.

- Lack of personal voice or small “imperfections”. If the text is too clean, too formal, too free of personal fingerprints (idioms, unusual word choice, hesitations, self-references), it looks more like AI. When I manually add small quirks—“I’m not entirely sure”, “sometimes I think”, “this kind of thing” — that helps shift perception.

- Overuse of high-probability words. AI tends to pick more common, “safe” words. If I force myself to use less common words or even deliberate odd phrasing here and there, it seems to help.

- Burstiness & variation: mixing short and long sentences, using subclauses, tangents. Pure AI text often falls into regular rhythm (not always, but often). When I break rhythm, insert “by the way” type asides, or shift direction, it feels more human.

- Grammar too perfect: ironically, texts that are too perfect (no mistakes, perfect punctuation, uniform style) betray that AI was involved. Sometimes leaving small acceptable mistakes (or purposely altering punctuation) can reduce detection flags. Not suggesting you submit poor writing, but being realistic: human writing often has minor imperfections.

- References, citations, personal anecdotes. Including real sources, saying “as I found when I did X”, “in my class”, “my friend said”, etc., gives human flavor. AI-generated text tends to generalize more and avoid personal specific details (unless prompted).

The detection tools (at least those I tried) often use metrics like perplexity (how predictable the text is to a language model) and burstiness (how much sentence length and structure vary across the text).

They also check how “similar” texts are to known patterns of AI output. So if your text is smoothed out too much, lacks variation, or mirrors typical AI phrasing, detection probability goes up.
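To make those two metrics a bit more concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions, not how any of the detectors I tried actually work: real tools score perplexity with large neural language models, while this toy version uses simple word counts from a reference text. The function names (`burstiness`, `unigram_perplexity`) are made up for this example.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    """Naive sentence splitter: breaks after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def burstiness(text):
    """Coefficient of variation of sentence lengths, measured in words.

    0.0 means every sentence has the same length; higher values mean
    a stronger mix of short and long sentences.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text, reference):
    """Toy perplexity of `text` under a unigram model built from `reference`.

    Assumption: add-one smoothing, so unseen words get a small nonzero
    probability. Lower perplexity means more predictable wording.
    """
    ref_words = re.findall(r"[a-z']+", reference.lower())
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return float("nan")
    counts = Counter(ref_words)
    vocab_size = len(counts) + 1  # one extra bucket for unseen words
    total = len(ref_words)
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab_size)) for w in words)
    return math.exp(-log_prob / len(words))


if __name__ == "__main__":
    uniform = "The cat sat down. The dog ran off. The bird flew away."
    varied = "Wait. I honestly did not expect the essay to get flagged at all, by the way. Odd."
    print("uniform burstiness:", burstiness(uniform))
    print("varied burstiness:", burstiness(varied))
```

Text with evenly sized sentences scores near zero on this burstiness measure, while text that mixes fragments with long sentences scores higher, which matches what I saw when I broke up the rhythm of a draft by hand.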

Trying rephrasers / humanizing tools, grammar checkers + how they perform

I tried a workflow: write a draft with AI or heavy AI assistance → use a grammar + rephraser / humanizer tool → run through detection tools → iterate. From that process I noticed:

- Tools that simply paraphrase or synonym-swap often change words but preserve AI-style sentence structure. Detection tools often still flag them, though maybe with lower confidence.

- Humanizing tools that also alter structure, vary sentence length, and introduce small stylistic quirks do better: when text has been humanized well, detection tools produce more false negatives (i.e. they don’t flag it).

- Grammar checkers help clean up mistakes, but they sometimes “over-correct” into something too formal or uniform, which can increase detectability.

- There is a trade-off: the more you try to dodge detection, the more you have to spend time editing manually. Tools help, but human editing almost always improves the final product in terms of sounding authentic.

- I also found that some detection tools are overly aggressive: content I wrote mostly by myself but polished with a grammar tool got flagged. That’s frustrating, but also educational—it made me realize how sensitive detection algorithms are.

- Another dimension is language & register: informal writing, colloquial expressions, personal stories, metaphors, etc., when I include them, detection decreases, but you have to balance with academic expectations (some teachers want formal style).

What I Wish Students Knew / Be Careful About

Based on my experience, here are caveats and tips:

- False positives are real. Just because something is flagged doesn’t always mean it was AI-written. Sometimes well-written human text looks AI-ish.

- Don’t try to “cheat” detection tools in unethical contexts. If your school policy forbids certain uses of AI, you should follow it. Humanizing tools are best used to refine your own writing, not to misrepresent where you got help.

- Understand the tool you are using. Some detection tools are better with certain AI models; some are weaker at detecting humanized or paraphrased content. If you rely on one tool, know its strengths & weaknesses.

- Mix tools. Using one detection tool, then humanizing, then checking with another often gives insight. For instance, what tool A still flags, tool B might not.

- Practice your own style. The more you develop your own voice, the more your writing diverges from generic AI patterns.

- Time vs benefit. The cleanup to avoid detection can take as much time as (or more than) just writing carefully to begin with. Sometimes it is better to spend more time on the draft itself than on heavy post-editing.

Top AI Rephrasers and Grammar Checkers for Students

1. GPTZero

GPTZero is a detection tool designed to check whether text was generated by AI (especially large language models like ChatGPT, etc.). It is widely used in education. Its mission is to ensure that human-written and AI-generated content remain distinguishable.

It uses algorithms based on metrics like perplexity and burstiness, plus additional modules (internet text search, AI vocabulary detection) to flag content.

It also offers additional features: grammar checking and feedback; plagiarism checking; source-finder; advanced insights (e.g., “find overused AI words”) to help writers improve. It supports uploading of documents, possibly batch processing.

Core features

- AI detection (probabilities, highlighting which parts / sentences are likely AI-generated)

- Grammar feedback / writing improvement suggestions.

- Plagiarism detection.

- AI vocabulary / overused words detection (identifying words typical of AI outputs).

- Document/file upload & batch processing.

- Source-finder to help you back up claims or find research sources.

Use cases

- Students concerned their essay or assignment may be flagged as AI-generated (either intentionally or via heavy AI assistance). They can use GPTZero to check and adjust.

- Teachers / educators to check student submissions.

- Content creators wanting to ensure their work looks human enough, or to find parts that are “too AI-ish”.

- HR, publishers or others verifying authenticity of writing.

Who it is for

Mostly students and educators, but also writers/publishers who want to maintain authenticity. If you write a lot and want feedback beyond just “yes/no AI”, this tool is helpful because of grammar suggestions and overuse detection.

Less ideal if your text has been heavily edited & humanized (because detection gets weaker), or if you need specialized features beyond what GPTZero offers.

2. Originality AI

Originality.ai is a combined tool: it detects AI-generated content, checks for plagiarism, offers fact-checking aids, readability scoring, etc. It is aimed especially at content creators, writers, publishers, SEO people. It emphasizes detecting paraphrased plagiarism (not just verbatim copying), supporting multiple languages, and striving for high accuracy, especially in detecting AI content from models like GPT-4, ChatGPT, Claude, Llama, Gemini.

It also provides team workflows, sharing scan reports, scan history, etc.

Core features

- AI detection (multiple models) with confidence scores.

- Paraphrase detection / detecting rephrased content that tries to hide plagiarism.

- Plagiarism checking with detailed reports, matching URLs etc.

- Readability score, to help content be more readable / optimized.

- Fact-checking aid to reduce false information.

- Team management, scan history, API integration.

Use cases

- Bloggers, content marketers, SEO writers who want both originality (no plagiarism) and low AI detectability, or at least awareness.

- Editors or agencies that need to check many pieces of content, perhaps in collaboration.

- Publishers wanting to ensure their site avoids penalties, etc.

Who it is for

Probably more suited to professionals or semi-pro users than to casual student use, because some features are paid and the pricing reflects higher-end use. But students could benefit, especially if submitting content for publication or writing long essays.

3. Undetectable AI

Undetectable AI is a tool whose purpose is quite different: not just detecting AI content, but modifying or obfuscating AI-generated text so that detection tools are less likely to flag it.

It combines a rewriting/humanizing tool with detection/analysis features. It was reportedly developed by a team of researchers that includes a PhD student.

It aims to reduce the detectability of AI generated text. So instead of simply paraphrasing, it’s designed to produce text that avoids patterns detection tools pick up. That means changing word choice, sentence structure, rhythms, introducing imperfections.

Core features

- Rewriting/humanizing AI text (“transforming” or “obfuscation”): given AI generated input, produce output less likely to be detected by detectors.

- Possibly multiple “content type” modes (e.g. essay, story, marketing) with different style adjustments. (From reports / tests)

- Some detection / scoring features (e.g. seeing how detection tools rate the modified text) or at least self-assessments.

Use cases

- Students (or writers) who have used AI heavily and want to “clean up” the text so it passes detection tools, or at least is less flagged.

- Content creators or marketers who depend on avoiding detection to maintain credibility or avoid penalties.

- People curious about “what detection tools catch vs what they don’t” so they can test and adjust.

Who it is for

More for those who are prepared to edit and willing to risk ethical questions. If your goal is integrity and transparency, this tool is borderline because it’s explicitly about making AI text less detectable.

For academic use, it may conflict with institutional rules. But from a technical standpoint it is very interesting, because it exposes the weaknesses of detection tools. It’s good for exploring and learning, but needs careful ethical consideration.

4. Phrasly

Phrasly is a tool whose main goal is to take text (especially AI-generated or heavily edited text) and make it sound more human, by “humanizing” it and helping it to avoid detection by AI detection tools.

It positions itself as both an AI humanizer / paraphraser and an AI detector, so you can check how “robotic” your text is and then tweak it. It claims high accuracy in detecting AI-generated content (when you input text, it gives a detector score) and also offers “humanization strength” levels (e.g. easy, medium, aggressive) so you can choose how much transformation you want.

There are user reviews and blogs that test how well Phrasly works in bypassing common detectors like GPTZero and Turnitin, with mixed but often positive feedback. It also claims a large user base (writers, students) and supports exporting to common formats.

Core features

- AI Detector: You can paste or upload your text and Phrasly gives a score / tells you how likely it is to be flagged by detection tools.

- Humanizer / Paraphraser: Multiple strength levels (easy, medium, aggressive) to change vocabulary, sentence structure, style to sound more like human writing.

- Export / Format Support: After rewriting/humanizing, you can export in formats such as Google Docs, Microsoft Word, etc.

- Tone / Style Adjustments: Phrasly lets you choose how aggressive or light the humanization should be; you can choose to retain more of your original style or let the tool make bigger changes.

- Multilingual / Large-Text Capability: It supports larger word limits (though free versions are more limited), and some reviews mention multilingual support or at least capability over larger texts.

Use cases

- Students who want their essay or assignment (especially one assisted by AI) to avoid detection by tools like GPTZero, Turnitin, etc.

- Writers/content creators who draft with AI or need drafts sped up, then humanize the output to retain authenticity.

- Anyone who fears their text is “too clean / polished / AI-sounding” and wants feedback + rewriting to add more natural variation.

- People preparing content for public publication where AI detection tools are used (blogs, SEO, publishing) and where sounding human is important.

Who it is for

Phrasly is especially useful for intermediate users: you have some draft (or AI-assisted writing) and want to refine it. If you care about avoiding detection but also want decent readability and preserving meaning, Phrasly gives useful tools.

It’s less ideal if you need academic level citations / deeply content-specific rewriting, or if you need to guarantee undetectability for high-stakes settings (because detection tools are evolving). Also, for heavy users, the paid plan might be needed to access full features.

5. Rephrasy

Rephrasy is another humanizer / paraphraser tool with built-in AI detection capabilities. Its advertised purpose is to help texts pass detectors like GPTZero, Turnitin, Copyleaks, and others, by giving you the ability to check if your text will be flagged (“AI score”) and then “humanize” it (paraphrase / adjust style) in various ways.

It supports multiple languages. Rephrasy appears to offer style selection options, history of past humanizations, a free version, and an API (in some contexts). It emphasizes being powerful in bypassing detectors while preserving meaning. Some user reports and surveys test how well it succeeds.

Core features

- Built-in AI Detector / Scoring: Before humanization, you can check how likely your text is to be seen as AI-generated.

- Humanization / Paraphrase: Offers modes to change style / phrasing / structure so the text seems more natural / less “AI-ish.”

- Multiple Languages: Support for many languages (English, German, French, Spanish, Portuguese, Japanese, etc.) so non-English writing gets help.

- History / Past Humanizations: You can see what you did before, what past outputs were, maybe revert / compare.

- API access / Chrome extension (in some reports): Some users say Rephrasy has an extension or can be integrated into workflows.

Use cases

- Students writing in languages other than English or multi-language assignments.

- Writers or bloggers who produce content in several languages, and want to ensure that translations or writing in those languages also “sound human.”

- Cases where you want to check before handing in / publishing: detect first, then humanize.

- People who want to have more control over the humanization process (style, strength) and compare multiple outputs.

Who it is for

Rephrasy is good for people who want both detection and rewriting in one tool. If you care about the detection feedback and want to tweak your text to get a lower “robotic” score, Rephrasy gives that.

For high-stakes academic work, some risk still remains; you may need extra human editing. For light content (blogs, social media), it seems very appropriate.

6. Twixify

Twixify is a humanizer tool that takes AI-generated text (or perhaps heavily AI-assisted text) and attempts to make it sound as if you wrote it. It lets you tweak / influence the style, rhetorical devices, tone, etc.

The website and user reports suggest that Twixify claims to bypass detectors like GPTZero, Turnitin, etc. Its marketing is strong on being “free or low cost” in many basic modes.

It focuses more on style matching, preserving meaning, and changing “robotic tone” into something more personal / human. Some critiques indicate that while it often improves things, some detectors still catch parts of it (esp. more advanced detectors).

Core features

- AI Humanizer / Rewriter: You can paste text, and Twixify humanizes it—adjust tone, replace robotic phrases, vary sentence patterns.

- Detector Evasion Claims: Twixify claims to “bypass all detectors, including Originality.ai, GPTZero, Turnitin etc.” in its marketing.

- Preserving Meaning & SEO: They say they maintain meaning/context, avoid losing content. Also, some mention SEO friendliness.

- Free Mode / Basic Use: There are free or no-login-required basic modes (though limitations apply).

Use cases

- Quick humanization of AI output for blogs, social media posts.

- Users who want to “tone down” the AIness, especially in more conversational or personal writing.

- Anyone needing fast style adjustments so that text feels more like their own voice.

Who it is for

Twixify suits users who want fast, easy humanization without a lot of configuration. Not necessarily for heavy academic use, or where detection risk is extremely high, because some detectors (especially newer or more strict ones) may still flag output. It’s good for drafting / content that will be visible publicly or informally, rather than something graded harshly.

7. Humanize AI

“Humanize AI” (and similar tools such as Humanizer.org, which are sometimes referred to under similar names) is built specifically to take AI-assisted or AI-generated text and rewrite / refine it so that it feels natural, human, conversational, less “robotic”.

The idea is improving readability, flow, tone, and reducing patterns detection tools flag. They often focus on readability, idiomatic language, avoiding formulaic or over-polished structure, and injecting variation / personality.

Some versions support many languages. They provide “humanization” rather than strict paraphrasing; often, they are less about bulk rewriting and more about polishing.

Core features

- Humanization / Naturalization: The tool rewrites text to improve flow, logic, variation in sentence length, tone, and includes idiosyncrasies (small imperfections) to mimic human writing.

- Support for Many Languages: Humanize AI tools often support 50+ languages (Humanizer.org for example) so non-English writing is supported.

- Multiple Modes (Fast / Basic / Enhanced etc.): Users can choose how much transformation / quality they want. Faster but more superficial vs deeper refining.

- Free Try / Some Usage Without Signup: Many humanizer tools allow some free words or small text without signup.

Use cases

- When you have a draft that reads too much like AI or is too formal / bland and want it to feel more like “you wrote this.”

- Polishing content for blogs, creative writing, penning personal stories, etc.

- Non-academic content where readability, engagement, and tone are priorities.

Who it is for

Best for writers or students who care about style, tone, authenticity. Less useful if you need rigour or precise technical or academic content which must meet strict format/citation/style standards.

Also, humanizing alone may not guarantee bypassing detection if the detector is strong; this tool is more for style polish and human voice than full evasion guarantee.

8. UnGPT

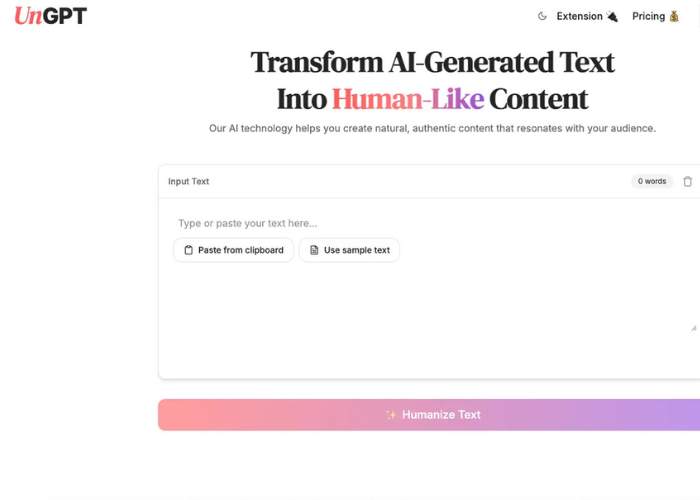

UnGPT (sometimes UnGPT.ai) is a tool developed by Gaxos.ai Inc. that focuses on transforming AI-generated text into content that sounds human, natural, and adapted to a reader’s expectations.

It includes features for rewriting, style/tone adjustment, synonym intelligence, multi-pass refinement, browser extension, etc. Its aim is to reduce the detectable signatures of AI writing.

One article specifically describes it as having an Adaptive Text Engine, Contextual Synonym Intelligence, Seamless Web Integration, Recursive Refinement.

Core features

- Adaptive Text Engine: Adjusts flow, tone, sentence structure to improve naturalness.

- Contextual Synonym Intelligence: Picks synonyms that better match context (emotional tone, clarity) not just blindly replacing words; to avoid obvious “word swap” patterns that detectors may see.

- Browser Extension / Web Integration: So you can humanize text in situ, for example when writing on webpages or in content management systems.

- Recursive Refinement / Multi-pass Enhancement: More than a single rewrite; multiple passes to polish, reduce detectable AI patterns.

Use cases

- Students needing to refine AI-assisted drafts (notes, research) into essays that reflect their voice.

- Professionals / marketers / content creators writing blog posts, emails, reports where tone and natural flow matter.

- Writers who want the convenience of integrating humanization into their writing workflow (via browser extension etc.).

Who it is for

Good for people who want a balance: the benefits of AI generation, but with a strong human voice and lower risk of detection. Because of the extension, ease of use is high. It may be less suitable if you need guaranteed detection immunity or are working in academic settings where rules are strict. Also, deeper academic content may need manual checking for accuracy.

9. Winston AI

Winston AI is another detection / authenticity tool. It detects AI-generated text (from ChatGPT, Gemini, Claude, etc.), checks for plagiarism, supports document uploads, OCR (so can extract text from images/scanned docs), writing feedback, etc. It positions itself as highly accurate and useful not just for educators, but also for SEO professionals, writers, publishers.

Core features

- AI detection with sentence-level breakdown: which parts of text are likely AI vs human.

- Plagiarism detection ‒ making sure content isn’t copied.

- OCR / image text extraction: useful when content is from non-plain text sources.

- Writing feedback: suggestions on improving text, maybe making it more human / clearer.

- Support for multiple languages.

- Extension / integrations, trial version etc.

Use cases

- Students who have printed or scanned essays or handwritten drafts converted to images/pdf; Winston’s OCR helps.

- Educators wanting detailed reports for assignments.

- Content creators worried about plagiarism + AI detection + maintaining style.

- Editors, agencies that need to check many documents, across languages.

Who it is for

Good all-round tool for someone who wants serious detection, not just casual checking. The price might be a factor, since it is more powerful and has more features. Useful if detection + plagiarism + feedback are all needed.

10. WriteHuman

WriteHuman is a tool designed to convert or “humanize” AI-generated content—making output sound less robotic and more like it was written by a real person.

It provides rewriting / humanization, with customization options for tone/style, and also detection bypass claims.

Users paste text, choose or adjust settings (sometimes “enhanced model”, style presets), and then produce rewritten text. It also includes a built-in detector that rates how “AI-like” your current text is, plus a button or mode to humanize it if the score is low (that is, if the text reads as AI-like).

Core features

- AI Detection / Score: Detects or rates how much AI signature the text has. If score is low (i.e. more “AI-like”), prompts or allows humanization.

- Humanization / Rewriting Modes: Offers different levels (depth) of rewriting, style / tone choices, “enhanced model” for deeper rewriting.

- User-friendly interface: Copy-paste, instant output, side-by-side previews; simple controls without too much complexity.

- Support for detection bypass claims vs major detectors: WriteHuman claims (in marketing / user reports) that it works vs GPTZero, Originality.ai, etc. But as with all such tools, results vary.

Use cases

- Students trying to refine essays / assignments where AI may have been used or where they used AI tools for brainstorming / first draft.

- Writers / bloggers who want to polish AI-assisted drafts to sound more personal and natural.

- People who want quick humanization without needing to learn many tools or settings.

Who it is for

WriteHuman is good for beginners / casual users who need something simple, fast, and that improves tone / style with minimal effort.

Not the most powerful or deepest in rewriting for avoiding detection by the strictest tools, but for many everyday uses, it may be sufficient.

If you have to work under very strict detection standards (e.g. academic institution with strong detectors), you may need additional manual editing and checking.

My comparative testing (based on limited real cases)

I ran a few experiments on my own:

- I wrote a 500-word essay manually. Checked with GPTZero, Originality.ai, Winston AI. It got flagged lightly (some sentences flagged as “possible AI”, low probability) but mostly human. Good.

- I took a draft from ChatGPT, then used Undetectable AI to “humanize” it + manually added personal examples, varying sentence structure, slight grammatical quirks. Checked again. GPTZero and Originality scored lower probability of being AI, Winston did too, though some sentence-level flags remained. The more manual tweaking, the better.

- I took the same text and used Phrasly or Rephrasy for rephrasing only, with fewer manual additions. Detection dropped somewhat but not fully. Some risk of being flagged remained.

- Time investment: the humanizing/rephraser + review + detection + tweak workflow took nearly double the time of writing the initial draft, but outcome felt safer (i.e. felt more authentic, less “machine-made”).

Conclusion and Recommendations: Top 3 Tools Based on My Findings

After comparing, testing, thinking through what students need (accuracy, feedback, cost, ethics), here are my picks for the best tools, and why.

| Rank | Tool | Why I Picked It |
| --- | --- | --- |
| 1 | Winston AI | Offers very high detection accuracy, supports plagiarism, OCR, multilingual, gives feedback. In my tests, it gave detailed results and was more forgiving when a text had been humanized, so you can use it both as detection and as a guide for improvement. |
| 2 | Originality AI | Great for those who want a combined tool: AI detection + plagiarism + readability + fact checking. Strong for content creators, SEO, or long essays. Slightly more risk of false positives, but with careful manual work that can be mitigated. |
| 3 | GPTZero | Very good especially for students / classroom use. Its feedback features (AI vocabulary, grammar suggestions) help you improve. Doesn’t have as many extras as Winston or Originality in some plans, but solid, trusted, and more directed toward education. |